Zero to E2E Test Generator with Copilot Spaces

Making Machines Dream Right #2

After manually testing my app’s asset creation, update, and delete flow one too many times, I decided it was finally time to build automated end-to-end (E2E) tests.

In the past, I’ve handled this by exporting a Postman collection of all routes and running it with Newman from the CLI. That run can be automated in a CI pipeline or hooked into Git workflows.
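That CLI workflow is easy to wrap in a small script that a pipeline can call. Below is a minimal Python sketch, assuming Newman is installed globally (`npm install -g newman`); the function names and file paths are hypothetical placeholders, not part of my project.

```python
import subprocess
from pathlib import Path
from typing import Optional


def build_newman_command(collection: Path,
                         environment: Optional[Path] = None,
                         junit_report: Optional[Path] = None) -> list[str]:
    """Assemble a `newman run` invocation for an exported Postman collection."""
    cmd = ["newman", "run", str(collection)]
    if environment is not None:
        # -e loads a Postman environment export (base URLs, tokens, IDs, ...).
        cmd += ["-e", str(environment)]
    if junit_report is not None:
        # Keep CLI output and also write a JUnit XML report that most CI systems can display.
        cmd += ["--reporters", "cli,junit", "--reporter-junit-export", str(junit_report)]
    return cmd


def run_collection(collection: Path, environment: Optional[Path] = None) -> int:
    """Run the collection; Newman exits non-zero when any request or test fails."""
    return subprocess.run(build_newman_command(collection, environment)).returncode
```

A pipeline step could call `run_collection(Path("e2e.postman_collection.json"), Path("dev.postman_environment.json"))` and fail the build on a non-zero return code.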

Without an existing set of Postman calls, I wondered if I could get AI to do the heavy lifting. Turns out the answer was yes!

These were the steps:

1. Install a test runner in the project
2. Create an execution script
3. Run my first successful test
4. Use AI to rapidly achieve full test coverage

Step 1 was simple enough.

Step 2 was more of a headache, since the script had to perform a series of operations:

- Authenticate
- Store credentials
- Load environment variables
- Retrieve a pre-signed URL
- Upload the item to S3
- Perform the mutation with the ID returned from S3
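In generic form, that sequence might look something like the sketch below. It is a minimal Python illustration, not my actual script: the environment variable names (`AUTH_URL`, `E2E_USER`, `E2E_PASSWORD`, `GRAPHQL_URL`), the auth response’s `token` field, and the `createUploadUrl`/`createAsset` operations are all hypothetical stand-ins. The HTTP calls are passed in as functions so the flow can be exercised without a network.

```python
import json
import urllib.request
from typing import Callable, Mapping

# Hypothetical GraphQL operations; the real ones come from your schema.graphql.
PRESIGN_MUTATION = "mutation($fileName: String!) { createUploadUrl(fileName: $fileName) { url key } }"
CREATE_ASSET_MUTATION = "mutation($key: String!) { createAsset(s3Key: $key) { id } }"

PostJson = Callable[[str, dict, dict], dict]  # (url, JSON body, headers) -> parsed JSON response
PutBytes = Callable[[str, bytes], int]        # (url, raw body) -> HTTP status code


def http_post_json(url: str, body: dict, headers: dict) -> dict:
    req = urllib.request.Request(url, data=json.dumps(body).encode(), method="POST",
                                 headers={"Content-Type": "application/json", **headers})
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())


def http_put_bytes(url: str, data: bytes) -> int:
    # A pre-signed S3 URL accepts a plain PUT of the object bytes; no AWS SDK is needed.
    req = urllib.request.Request(url, data=data, method="PUT")
    with urllib.request.urlopen(req) as resp:
        return resp.status


def prepare_e2e_state(env: Mapping[str, str], payload: bytes,
                      post_json: PostJson = http_post_json,
                      put_bytes: PutBytes = http_put_bytes) -> dict:
    """Authenticate, upload via a pre-signed URL, then create the asset; return values for Newman."""
    # 1. Authenticate with credentials loaded from environment variables.
    auth = post_json(env["AUTH_URL"], {"username": env["E2E_USER"],
                                       "password": env["E2E_PASSWORD"]}, {})
    headers = {"Authorization": f"Bearer {auth['token']}"}

    # 2. Ask the API for a pre-signed upload URL.
    presigned = post_json(env["GRAPHQL_URL"], {"query": PRESIGN_MUTATION,
                                                "variables": {"fileName": "e2e-fixture.bin"}}, headers)
    upload = presigned["data"]["createUploadUrl"]

    # 3. Upload the item directly to S3.
    status = put_bytes(upload["url"], payload)
    if status not in (200, 204):
        raise RuntimeError(f"S3 upload failed with HTTP {status}")

    # 4. Run the mutation using the key the item was stored under.
    created = post_json(env["GRAPHQL_URL"], {"query": CREATE_ASSET_MUTATION,
                                              "variables": {"key": upload["key"]}}, headers)
    return {"token": auth["token"], "assetId": created["data"]["createAsset"]["id"]}


def write_postman_environment(values: Mapping[str, str], path: str) -> None:
    """Store the credentials and IDs as a Postman environment file that `newman run -e` can load."""
    env_file = {"name": "e2e", "values": [
        {"key": k, "value": v, "enabled": True} for k, v in values.items()]}
    with open(path, "w") as f:
        json.dump(env_file, f, indent=2)
```

Calling `write_postman_environment(prepare_e2e_state(os.environ, fixture_bytes), "e2e.postman_environment.json")` would leave a file that `newman run <collection> -e e2e.postman_environment.json` can load, so requests can reference `{{token}}` and `{{assetId}}`.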

Since my script contains a lot of details specific to my implementation, I won’t walk through it here. Once it was all working, it was time to create my first E2E test.

GitHub Copilot Spaces has become my favorite AI tool because it lets me engineer a context environment that excels at producing structured output. Once the proper context is set, you never have to paste code into the editor again: just select the right Space for what you want to work on, and it already knows most of what you’re looking for.

These were the files I added to my Space:

- schema.graphql: definitions of the API actions and their required arguments
- Service files that consume the API
- Resolver files that back those calls

By the time my Space was quickly and consistently returning working tests, its context allotment was at 75%.

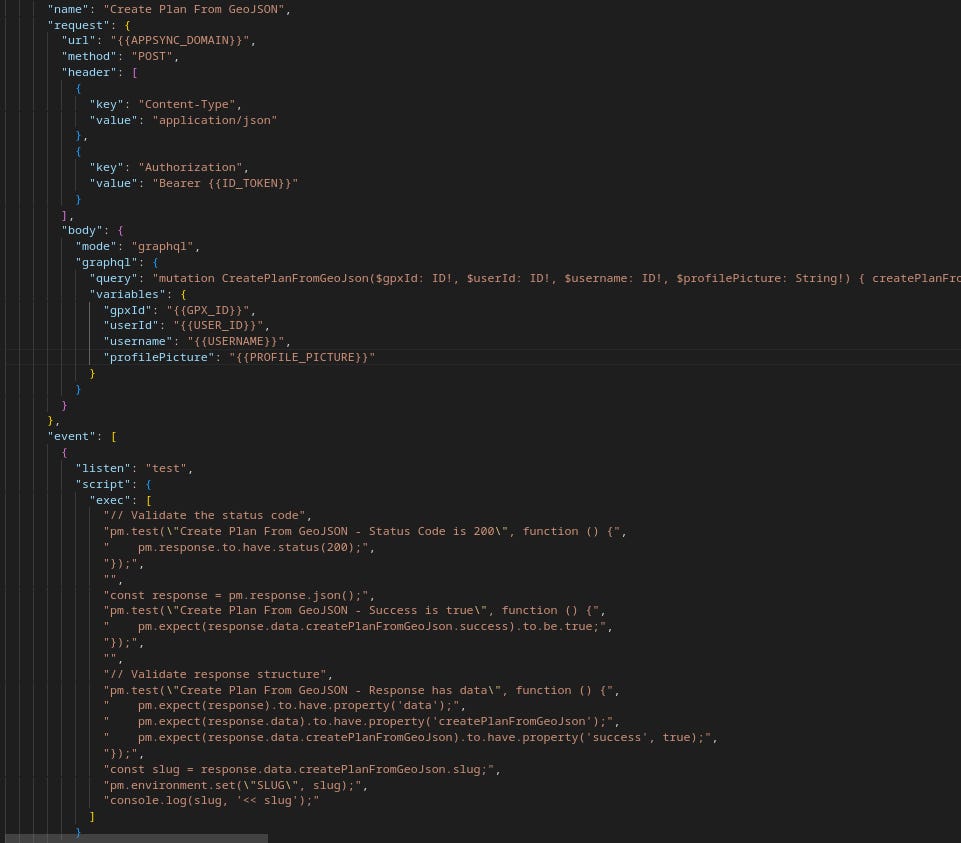

What came out looked like this: readable, but not something you’d ever want to type out by hand. Since my workflow didn’t involve actually building working calls inside Postman, being able to generate this file directly is a huge win and removes the need to maintain the tests by hand.

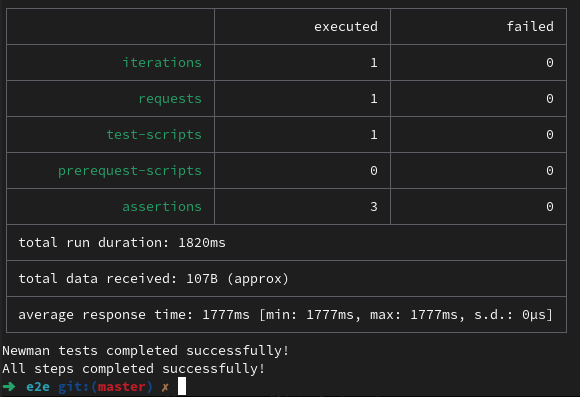
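For anyone who hasn’t opened one, a Postman collection is JSON in the v2.1 collection format, and each request item carries its own test script. As a rough sketch of that shape (not the file my Space produced), here is a Python helper that builds one GraphQL request item; the `token`, `graphqlUrl`, and `assetId` variables and the `updateAsset` mutation are hypothetical.

```python
import json

COLLECTION_SCHEMA = "https://schema.getpostman.com/json/collection/v2.1.0/collection.json"


def graphql_item(name: str, query: str, variables: dict, checks: list[str]) -> dict:
    """One Postman v2.1 request item: a GraphQL POST plus the tests that run on its response."""
    return {
        "name": name,
        "request": {
            "method": "POST",
            # {{token}} and {{graphqlUrl}} are resolved from the Newman environment at run time.
            "header": [{"key": "Authorization", "value": "Bearer {{token}}"}],
            # Postman's GraphQL body mode stores the variables as a JSON string.
            "body": {"mode": "graphql",
                     "graphql": {"query": query, "variables": json.dumps(variables)}},
            "url": "{{graphqlUrl}}",
        },
        # "test" scripts run after the response arrives; pm.* is Postman's sandbox API.
        "event": [{"listen": "test", "script": {"type": "text/javascript", "exec": [
            "pm.test('status is 200', () => pm.response.to.have.status(200));",
            "const body = pm.response.json();",
            "pm.test('no GraphQL errors', () => pm.expect(body.errors).to.be.undefined);",
            *checks,
        ]}}],
    }


def collection(name: str, items: list[dict]) -> dict:
    """Wrap request items in a collection that `newman run` can execute."""
    return {"info": {"name": name, "schema": COLLECTION_SCHEMA}, "item": items}


# Example: an update test for a hypothetical updateAsset mutation.
update_asset = graphql_item(
    "Update asset",
    "mutation($id: ID!, $title: String) { updateAsset(id: $id, title: $title) { id title } }",
    {"id": "{{assetId}}", "title": "renamed"},
    ["pm.test('title updated', () => pm.expect(body.data.updateAsset.title).to.eql('renamed'));"],
)
print(json.dumps(collection("Asset E2E", [update_asset]), indent=2))
```

Multiply that by every create, update, and delete path and it’s easy to see why generating the file beats writing it.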

After a few modifications, it ran! And the tests passed.

Step 3 complete.

What I’ve learned with Spaces is that once you have something that works, you need to add it back into the Space immediately. With a proven example to pattern from, you can trust that the AI is going to dream good dreams.

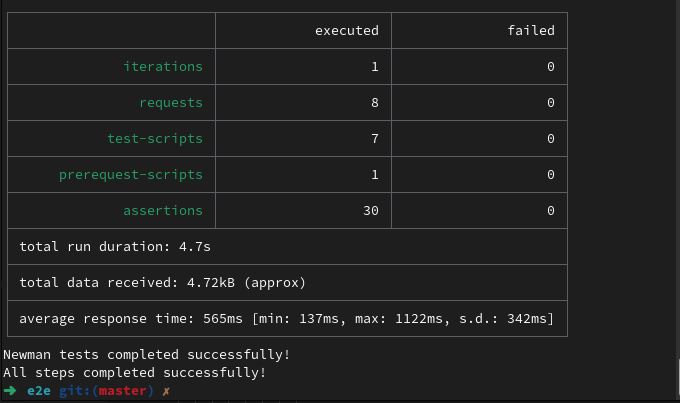

Step 4 is where the fun happens! At this point, it was trivial for the Space to get me to full test coverage. Watching the tests go from 3 to 30 in a matter of minutes is pretty wild for a process that previously would have taken days.

Once complete, the context includes both the existing tests and the API spec. As the API evolves, I update those files in the Space, and new tests follow with ease.
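Keeping coverage honest as the schema changes is easier with a quick check for operations that no request exercises. The Python below is a hypothetical helper, not part of the workflow described here: it scans schema.graphql with regular expressions (assuming one field per line and no nested parentheses in arguments) and compares the Query and Mutation field names against the GraphQL queries in a Postman collection. A real tool would be better served by a proper GraphQL parser.

```python
import re


def schema_operations(sdl: str) -> set[str]:
    """Field names on the Query and Mutation root types (naive regex scan, not a real parser)."""
    # Drop descriptions ("..." and """...""") so their text is never mistaken for a field.
    sdl = re.sub(r'"""[\s\S]*?"""|"[^"\n]*"', "", sdl)
    ops: set[str] = set()
    # Matches `type Query { ... }` and `type Mutation { ... }`, including `extend type` blocks.
    for body in re.findall(r"\btype\s+(?:Query|Mutation)\s*\{([^}]*)\}", sdl):
        body = re.sub(r"\([^)]*\)", "", body)  # strip argument lists, even multi-line ones
        # Assumes one field per line: `name: ReturnType`.
        ops.update(re.findall(r"^\s*(\w+)\s*:", body, flags=re.MULTILINE))
    return ops


def graphql_queries(items: list) -> list[str]:
    """Collect GraphQL query strings from Postman collection items, descending into folders."""
    found: list[str] = []
    for item in items:
        found += graphql_queries(item.get("item", []))  # folders hold nested item lists
        request = item.get("request")
        body = (request.get("body") or {}) if isinstance(request, dict) else {}
        if body.get("mode") == "graphql":
            found.append(body["graphql"]["query"])
    return found


def untested_operations(sdl: str, collection: dict) -> set[str]:
    """Schema operations whose name appears in no request's GraphQL query."""
    text = "\n".join(graphql_queries(collection.get("item", [])))
    return {op for op in schema_operations(sdl)
            if not re.search(rf"\b{re.escape(op)}\b", text)}
```

Running this after each schema update gives a short list of operations to ask the Space for tests on next.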

Being able to build curated collections of task-relevant context has dramatically improved both the quality of my dev time and the range of solutions AI can provide. In my experience, this matters more than the perfect prompt, the choice of model, or even which AI tool you use.

In future entries I'll be sharing more about the successes I've had using Copilot Spaces. For anyone looking to replicate this success: start small with one working example, add it back to your context immediately, then scale from there. The compound effect of good context is remarkable.